UNIFAI – UNIfied Framework for Ai Impact assessment

Introduction

Software engineering has long been a critical field of study, focusing on optimization problems and techniques for producing high-quality code. However, the advent of Artificial Intelligence (AI) has significantly altered the landscape of software development. The emphasis has shifted from traditional code-centric paradigms toward usability, user experience, and the emergence of knowledge-driven, code-agnostic development environments.

The paradox in modern AI development lies in the critical need to identify and control code quality and characteristics despite the inherent complexity and opacity of many AI paradigms. AI systems, particularly those employing deep learning, often operate through multiple layers of abstraction, which makes their underlying processes and decision-making mechanisms non-transparent. This opacity can obscure the quality and reliability of the code, leading to potential issues in performance, ethics, and compliance. Given this complexity, designers have a “moral” obligation to revisit fundamental questions about code evaluation and modeling. This involves not only understanding the technical aspects but also verifying that AI systems align with ethical standards and societal values.

Limitations of Conventional AI Evaluation

Work on AI evaluation is not new; it has been a topic of research for over four decades. For instance, [1] provides a foundational analysis of how to evaluate AI research by extracting insights and setting goals at each stage of development. While such analysis may appear trivial at first glance, it reveals why a concept is needed in the first place and which value systems it implicitly adheres to. This type of analysis is often underreported or entirely absent in the documentation and discussion of AI systems. Instead, metrics such as task-specific accuracy, precision, and false negative rate are frequently highlighted as benchmarks for model performance [2][3], while the methodological foundations and contextual relevance of these metrics are only occasionally communicated in detail. Moreover, in certain instances [4], additional tuning is applied not to improve the model’s inherent performance but to enhance the accuracy as perceived by end users, for example by adjusting models to better align with human expectations or to mitigate biases. This improves the system's perceived reliability but introduces an additional layer of abstraction that may obscure its actual behavior. In summary, the development of AI systems needs a return to the moral analysis of code, not only to ensure technical robustness but also to conform with ethical and societal expectations.
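To make the point about contextual relevance concrete, the three metrics named above can be computed from the same binary confusion-matrix counts. The sketch below is only illustrative: the `ConfusionCounts` container and the example counts are assumptions made up for this illustration and are not taken from any cited study.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ConfusionCounts:
    """Binary confusion-matrix counts (hypothetical container for illustration)."""
    tp: int  # true positives
    fp: int  # false positives
    tn: int  # true negatives
    fn: int  # false negatives


def accuracy(c: ConfusionCounts) -> float:
    """Share of all predictions that are correct."""
    total = c.tp + c.fp + c.tn + c.fn
    return (c.tp + c.tn) / total if total else 0.0


def precision(c: ConfusionCounts) -> float:
    """Share of positive predictions that are truly positive."""
    predicted_positive = c.tp + c.fp
    return c.tp / predicted_positive if predicted_positive else 0.0


def false_negative_rate(c: ConfusionCounts) -> float:
    """Share of truly positive cases that the model missed."""
    actual_positive = c.tp + c.fn
    return c.fn / actual_positive if actual_positive else 0.0


# Invented counts for a rare-event task: 10 positives among 1000 cases.
example = ConfusionCounts(tp=5, fp=5, tn=985, fn=5)

print(f"accuracy            = {accuracy(example):.2f}")
print(f"precision           = {precision(example):.2f}")
print(f"false negative rate = {false_negative_rate(example):.2f}")
```

With these invented counts the accuracy is 0.99 while half of the positive cases are missed, which is why a headline metric reported without its context can give a misleading picture of a system.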

While these benchmarks serve as valuable indicators of success in controlled environments, they are insufficient for assessing the complexities and uncertainties of real-world deployments, particularly when they are not tested with real data or concepts relevant to the system's intended use case. Data is a second dimension of evaluation with the same limitation, most visibly in the use of benchmark datasets. Such datasets enable the assessment of model accuracy against well-defined and discrete tasks [5][6][7][8], but they frequently fall short in demonstrating, or even investigating, the model’s correctness and reliability in real-world decision-making contexts.

Societal Impact, Ethical Frameworks, and Enforcement Gaps

While performance-based evaluation methods remain prevalent, they offer a limited perspective on AI system assessment. This approach's dominance can be attributed to two primary factors: its effectiveness as a marketing strategy that emphasizes performance metrics, and its close alignment with economic considerations that typically drive enterprise decision-making. However, this narrow focus on performance may overlook other crucial aspects of AI system evaluation, such as ethical considerations, societal impact, and long-term sustainability. A growing body of literature has raised concerns regarding the widespread and accelerated deployment of AI algorithms and platforms in diverse aspects of everyday life [9][10][11][12]. Numerous research efforts have proposed strategies to mitigate the associated social and economic impacts [13][14][15][16], while standardization bodies [17] and international organizations [18][19][20][21] have issued frameworks and ethical guidelines aimed at supporting responsible AI deployment. However, these initiatives are largely advisory in nature, lacking enforceability, and often fall short of establishing binding rules or regulatory mechanisms for the development and use of AI systems.

Toward a Dynamic and Holistic Evaluation Framework

To address the growing need for comprehensive algorithmic oversight, an Algorithmic Impact Assessment (AIA) tool has been developed to offer a structured and holistic framework for evaluating AI systems. The tool is organized into six main categories, each designed to be completed sequentially—beginning with the initial planning stage and concluding with the withdrawal or retirement of the AI system or platform.

This tool extends and enriches the approach proposed by CapAI [22], as outlined in the CapAI Internal Review Protocol (IRP). It has been designed to align with the principles and regulatory requirements of the European Union Artificial Intelligence Act (EU AI Act), ensuring that ethical, legal, and technical robustness is embedded throughout the AI lifecycle. While CapAI provides a strong foundation for documenting and supporting goals such as risk mitigation, trustworthiness, and accountability, this new assessment tool introduces key advancements. It places greater emphasis on user-friendliness and is built to be dynamically extensible, enabling contributions and updates from a wide range of stakeholders, including conformity assessment authorities, policymakers, researchers, AI experts, and the broader community.

Contemporary AI paradigms exhibit significant variation in their foundational design principles, objectives, operational workflows, and modeling techniques. Approaches such as active learning, swarm intelligence, neuro-symbolic AI, and other emerging paradigms are not developed according to a uniform framework, nor do they pursue identical outcomes or follow standardized developmental processes. This diversity of design logic underscores the dynamic and heterogeneous nature of AI system development. As a result, any static or rigid assessment framework risks becoming obsolete over time. The emergence of novel paradigms may necessitate new evaluation criteria, or even entirely new assessment categories, to adequately capture the nuances and specificities of these innovations.
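One way to picture a dynamically extensible assessment is an ordered list of categories to which new criteria or whole categories can be added without changing the rest. The sketch below is a minimal illustration under stated assumptions, not the tool's actual implementation: the class names, the methods, and the example criteria are hypothetical. Only the first and last lifecycle stages (planning and retirement) come from the description above; the intermediate categories are omitted because they are not named here.

```python
from dataclasses import dataclass, field


@dataclass
class Category:
    """One assessment category with its evaluation criteria (hypothetical)."""
    name: str
    criteria: list = field(default_factory=list)


class AssessmentFramework:
    """Ordered collection of categories that can grow over time (hypothetical)."""

    def __init__(self, categories):
        self.categories = list(categories)

    def _find(self, name):
        # Return the position of a category, or fail loudly if it is unknown.
        for index, category in enumerate(self.categories):
            if category.name == name:
                return index
        raise KeyError(f"unknown category: {name}")

    def add_criterion(self, category_name, criterion):
        """Attach a new criterion to an existing category."""
        self.categories[self._find(category_name)].criteria.append(criterion)

    def add_category(self, category, before=None):
        """Insert a new category; by default it goes at the end of the sequence."""
        if any(c.name == category.name for c in self.categories):
            raise ValueError(f"duplicate category: {category.name}")
        index = len(self.categories) if before is None else self._find(before)
        self.categories.insert(index, category)

    def order(self):
        """Names of the categories in the order they are to be completed."""
        return [c.name for c in self.categories]


# Placeholder categories and criteria, invented for illustration only.
framework = AssessmentFramework([
    Category("planning", ["intended purpose documented"]),
    Category("retirement", ["decommissioning plan documented"]),
])

# A new paradigm may call for an extra criterion or a whole new category.
framework.add_criterion("planning", "rule base reviewed for neuro-symbolic models")
framework.add_category(Category("multi-agent coordination"), before="retirement")

print(framework.order())
```

Keeping the categories in an explicit sequence preserves the sequential, lifecycle-oriented completion order, while the two `add_*` methods stand in for contributions from different stakeholders.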

Alignment with a Multimodal Regulatory and Ethical Environment

Acknowledging the increasingly complex and multimodal regulatory context of AI, UNIFAI is intended not only as a flexible tool but also as an adaptable compliance framework. UNIFAI seeks to align with the main regulatory instruments of the European Union: the Artificial Intelligence Act, the Data Act, and the Data Governance Act. These laws set core requirements for data use, transparency, accountability, and risk mitigation across the artificial intelligence ecosystem, particularly for high-risk systems. UNIFAI embeds these obligations through lifecycle-based documentation, risk tiering, and governance by design (for the algorithm, the model, and the data). In parallel, it draws on the HUDERIA methodology (Human Rights, Democracy, and the Rule of Law Impact Assessment)[23], as well as the recommendations made on it[24], to ensure that human dignity, democracy, and the rule of law are addressed throughout the AI development process. This is further supplemented by assessments such as the Human Rights AI Impact Assessment of Ontario[25] and the BSR (Business for Social Responsibility) Framework for a Human Rights-Based Approach to Impact Assessment[26], both of which offer practical guidance on identifying, mitigating, and remedying harms associated with non-discrimination and equal treatment.

In addition to regional approaches, UNIFAI connects with globally recognized ethical standards and sustainability frameworks: the UNESCO Recommendation on the Ethics of Artificial Intelligence[27], the UN Guiding Principles on Business and Human Rights (UNGPs)[28], and the OECD Guidelines for Multinational Enterprises on Responsible Business Conduct[29]. It also adopts the IEEE Recommended Practice for Assessing the Impact of Autonomous and Intelligent Systems on Human Well-Being (IEEE Std 7010-2020)[30], whose recommendations offer useful insights for developing ethical and socially responsible frameworks. Notably, the IEEE 7010 standard provides a multidimensional series of guiding questions that probe whether an AI intervention could, intentionally or unintentionally, undermine or remove human autonomy, agency, and well-being. To enhance its applied value, the IEEE 7010 framework also includes illustrative examples across major AI use cases, including facial recognition technology, automated hiring systems, and autonomous vehicles. These examples systematically map outcomes to the stakeholders affected by the AI intervention, providing a template for constructing evaluations against stakeholder-specific outcomes. For UNIFAI this serves a dual purpose: it is an additional information resource for ethical oversight, and it supports a comprehensive, stakeholder-centered evaluation of the AI system and its impacts.

Positioning UNIFAI: A Comprehensive Benchmark Against 10 FRIA Frameworks

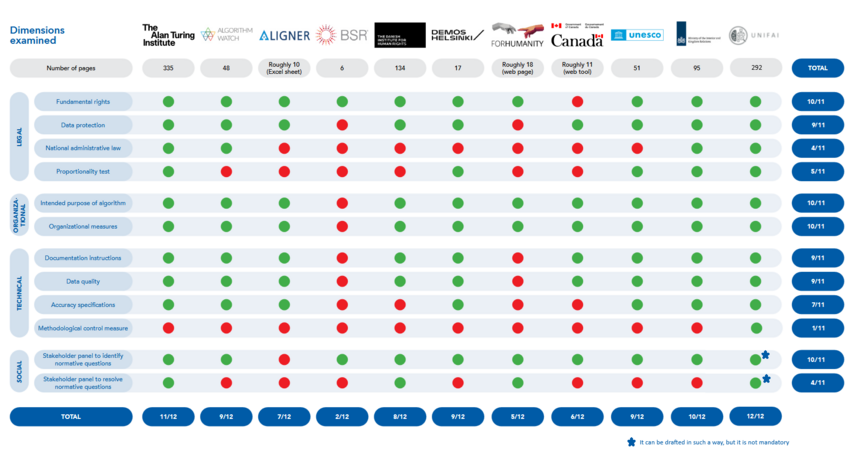

Following the in-depth analysis carried out by Algorithm Audit[31], which assessed 10 current Fundamental Rights Impact Assessment (FRIA) frameworks against 12 significant requirements spanning legal, organizational, technical, and social dimensions, an equally rigorous assessment was performed for UNIFAI against the same requirements. As shown in the figure, UNIFAI fully meets all 12 requirements, which makes it one of the most comprehensive and adaptable frameworks currently available.

With respect to the social dimension, UNIFAI is architecturally capable of embedding comprehensive social considerations through a dedicated social consideration framework. This component offers processes for stakeholder engagement, public deliberation, and the identification of normative conflicts that may emerge throughout the lifecycle of an AI system. Although UNIFAI is designed to accommodate these aspects, they are neither prescriptive nor required. Instead, the component is modular and contextual, allowing those implementing UNIFAI to adjust the level of social consideration to the use case, the regulatory environment, and the stakeholder environment. This architecture provides both scalability and flexibility: intensive social accountability processes can be applied where appropriate, while the burden is reduced for systems that do not require them as a matter of practicality or appropriateness.

References:

1. Cohen, P. R., & Howe, A. E. (1988). How evaluation guides AI research: The message still counts more than the medium. AI magazine, 9(4), 35-35.

2. Wei, J., Karina, N., Chung, H. W., Jiao, Y. J., Papay, S., Glaese, A., ... & Fedus, W. (2024). Measuring short-form factuality in large language models. arXiv preprint arXiv:2411.04368.

3. Paul, S., & Chen, P. Y. (2022, June). Vision transformers are robust learners. In Proceedings of the AAAI conference on Artificial Intelligence (Vol. 36, No. 2, pp. 2071-2081).

4. Kocielnik, R., Amershi, S., & Bennett, P. N. (2019, May). Will you accept an imperfect ai? exploring designs for adjusting end-user expectations of ai systems. In Proceedings of the 2019 CHI Conference on Human Factors in Computing Systems (pp. 1-14).

5. Deng, J., Dong, W., Socher, R., Li, L. J., Li, K., & Fei-Fei, L. (2009, June). Imagenet: A large-scale hierarchical image database. In 2009 IEEE conference on computer vision and pattern recognition (pp. 248-255). IEEE.

6. Lin, T. Y., Maire, M., Belongie, S., Hays, J., Perona, P., Ramanan, D., ... & Zitnick, C. L. (2014). Microsoft coco: Common objects in context. In Computer vision–ECCV 2014: 13th European conference, zurich, Switzerland, September 6-12, 2014, proceedings, part v 13 (pp. 740-755). Springer International Publishing.

7. Harish, B. S., Kumar, K., & Darshan, H. K. (2019). Sentiment analysis on IMDb movie reviews using hybrid feature extraction method.

8. Asghar, N. (2016). Yelp dataset challenge: Review rating prediction. arXiv preprint arXiv:1605.05362.

9. Al-kfairy, M., Mustafa, D., Kshetri, N., Insiew, M., & Alfandi, O. (2024, September). Ethical challenges and solutions of generative AI: An interdisciplinary perspective. In Informatics (Vol. 11, No. 3, p. 58). Multidisciplinary Digital Publishing Institute.

10. Wei, M., & Zhou, Z. (2022). Ai ethics issues in real world: Evidence from ai incident database. arXiv preprint arXiv:2206.07635.

11. Baldassarre, M. T., Caivano, D., Fernandez Nieto, B., Gigante, D., & Ragone, A. (2023, September). The social impact of generative ai: An analysis on chatgpt. In Proceedings of the 2023 ACM Conference on Information Technology for Social Good (pp. 363-373).

12. Padhi, I., Dognin, P., Rios, J., Luss, R., Achintalwar, S., Riemer, M., ... & Bouneffouf, D. (2024, August). Comvas: Contextual moral values alignment system. In Proc. Int. Joint Conf. Artif. Intell (pp. 8759-8762).

13. Mbiazi, D., Bhange, M., Babaei, M., Sheth, I., & Kenfack, P. J. (2023). Survey on AI Ethics: A Socio-technical Perspective. arXiv preprint arXiv:2311.17228.

14. Díaz-Rodríguez, N., Del Ser, J., Coeckelbergh, M., de Prado, M. L., Herrera-Viedma, E., & Herrera, F. (2023). Connecting the dots in trustworthy Artificial Intelligence: From AI principles, ethics, and key requirements to responsible AI systems and regulation. Information Fusion, 99, 101896.

15. Shavit, Y., Agarwal, S., Brundage, M., Adler, S., O’Keefe, C., Campbell, R., ... & Robinson, D. G. (2023). Practices for governing agentic AI systems. Research Paper, OpenAI.

16. Díaz-Rodríguez, N., Del Ser, J., Coeckelbergh, M., de Prado, M. L., Herrera-Viedma, E., & Herrera, F. (2023). Connecting the dots in trustworthy Artificial Intelligence: From AI principles, ethics, and key requirements to responsible AI systems and regulation. Information Fusion, 99, 101896.

17. Schiff, D., Ayesh, A., Musikanski, L., & Havens, J. C. (2020, October). IEEE 7010: A new standard for assessing the well-being implications of artificial intelligence. In 2020 IEEE international conference on systems, man, and cybernetics (SMC) (pp. 2746-2753). IEEE.

18. UNESCO. (2021). Recommendation on the Ethics of Artificial Intelligence. United Nations Educational, Scientific and Cultural Organization. https://www.unesco.org/en/artificial-intelligence/recommendation-ethics

19. International Organization for Standardization & International Electrotechnical Commission. (2022). ISO/IEC 22989:2022 – Artificial intelligence — Artificial intelligence concepts and terminology. ISO. https://www.iso.org/standard/74296.html

20. International Organization for Standardization & International Electrotechnical Commission. (2023). ISO/IEC 23894:2023 – Artificial intelligence — Guidance on risk management. ISO. https://www.iso.org/standard/77608.html

21. Council of Europe. (2024). Framework Convention on Artificial Intelligence and Human Rights, Democracy and the Rule of Law. Strasbourg, France.

22. Whittlestone, J., Nyrup, R., Alexandrova, A., & Cave, S. (2021). The CapAI framework: Assessing the capability of AI systems. Centre for the Governance of AI.

23. https://rm.coe.int/cai-2024-16rev2-methodology-for-the-risk-and-impact-assessment-of-arti/1680b2a09f

24. https://ecnl.org/sites/default/files/2021-11/HUDERIA%20paper%20ECNL%20and%20DataSociety.pdf

25. https://www3.ohrc.on.ca/sites/default/files/Human%20Rights%20Impact%20Assessment%20for%20AI.pdf

26. https://www.bsr.org/files/BSR-A-Human-Rights-Based-Approach-to-Impact-Assessment.pdf

27. https://www.unesco.org/en/articles/recommendation-ethics-artificial-intelligence

28. https://www.ohchr.org/sites/default/files/documents/publications/guidingprinciplesbusinesshr_en.pdf

29. https://www.oecd.org/en/publications/oecd-guidelines-for-multinational-enterprises-on-responsible-business-conduct_81f92357-en.html

30. IEEE Standards Committee. (2020). IEEE Recommended Practice for Assessing the Impact of Autonomous and Intelligent Systems on Human Well-Being: IEEE Standard 7010-2020. IEEE.

31. https://algorithmaudit.eu/knowledge-platform/knowledge-base/comparative_review_10_frias/